How we plan agile features without floating over the waterfall

Long before I started at SiteMinder, when I was just starting as a professional developer, every project I worked on was a strict waterfall process – solution outline, macro design, micro design, build and test. My experience was that the project plan on these initiatives was like a tinpot dictator – reasonable and well-meaning at first but becoming increasingly unhinged as requirements changed and surprises arose, continuing to demand obedience even as its demands became uncoupled from reality.

Relationships between teams and colleagues frayed as deadlines were missed, and parents were separated from their families as the inevitable death-march began. It was a generally stressful time for everyone involved. Experiences like this are etched in the memory of most people who’ve had to work under a waterfall process, and as such it’s not surprising that since agile has become mainstream, most development teams I’ve worked in have chosen to send their tyrant to the guillotine and do away with as much upfront planning and architecture as they can.

I was as happy as anyone to see this revolution come, but over time I’ve come to realise that while we made a big step forward in throwing out this regime, there was a small step backward that went with it. By welcoming change we increased our ability to respond to it, but with little upfront research we end up responding to avoidable surprises as much as changing requirements. The power to self-organise has made small, co-located teams far more effective, but as teams grow and become more distributed, having no central plan means that developers are more likely to find themselves blocked or completing work that overlaps with others.

There are a few approaches to this problem, ranging from simply accepting it as a consequence of the change, to creating so much process to deal with it that it becomes fake agile. There is certainly value in trying to find a middle ground here. In the software industry, we tend to pay a lot of attention to developers who work 10x faster than others, or technologies that can make individuals write code faster, but in my experience the simplest way to complete a feature 10x faster is to plan well enough that 10 people can work on it at once without interfering with each other – and it can be done without lapsing back into a waterfall-esque way of working.

SiteMinder is a hotel commerce platform that empowers hotels with a range of capabilities, like setting up a rate once and pushing it out to all the booking websites they list on, or setting up their own booking engine on their website. In the Multi-Property team at SiteMinder, we tie all these hotel-level capabilities together in a product that works for hotel groups and chains. Our aim is to make operations that would be mind-bogglingly complex to achieve manually happen at the press of a button for our users.

Given that we’re automating processes that can be complex, developing new features can be fraught with potential blockers, but the fast-changing nature of the hotel business means that we can’t afford to lose our agility either. In order to deliver reliably under these circumstances, we put a lot of emphasis on research and planning.

This post is a guide to how our team plans out a feature, in the hope that you can take some of the ideas here and apply them to your own work if you’re not doing something like this already. This isn’t intended to be a methodology – rather, it’s a snapshot of our current process, which we’re constantly improving week-to-week and month-to-month.

Before you start thinking too hard about how to solve a problem, you need a lucid understanding of what you’re solving and why, and you want to have this written down.

Writing down the context enables you to leave a trail of breadcrumbs so that those who are implementing the feature are able to understand why you made the decisions that you did, and change them if they need to. The key to making a plan agile rather than rigid is to transfer enough context so that the plan can be reworked when necessary, and hence keep the whole process of developing a feature responsive to change. We’d do well to learn from military planning here, which has a famous history of plans needing to be adapted, and puts a lot of emphasis on defining the problem such that context gets transferred from planner to implementer.

The other advantage to setting up the context in writing is that clear writing means clear thinking. The process of writing out a problem actually causes you to realise that you didn’t have as clear an understanding as you thought that you did, and causes you to seek clarification. I find the process of writing a clear, concise description of the problem and context is a great way of discovering misunderstandings or gaps in my knowledge that I wouldn’t have realised otherwise.

What to write for a problem/context definition varies from feature to feature, but I usually try to at least think about writing these points:

tl;dr

A one-sentence statement about what we’re doing, mainly so that someone stumbling across the page on Confluence can quickly figure out whether this is relevant to them.

What’s the background?

How did we get to the point of wanting to do this? Did another product develop a similar feature that was very popular and we want to do it too? Maybe we had an outage and we need to take steps to prevent another one?

Who are we doing this for?

This is important because in trying to come up with a solution, the easiest way to optimise for simplicity is to go all the way back to the users and stakeholders and ask what they actually need.

Why are we doing this?

Every product competes with “do nothing”, and so does this feature. Why does this need to be done? What makes it the next most important thing? This is important because you might realise that you’d be better off encouraging users to use a workaround, or you might realise that something else is more important, especially as the research you do later gives you a better understanding of how much work is actually involved.

What are we optimising for?

Are we trying to ship this as quickly as possible? Perform important refactors that will enable future implementation? Are we trying to reengineer something to handle an expected increase in scale? This is important because without defining what you’re optimising for upfront, it’s tempting to optimise for everything, which usually means overengineering.

Before you leap into the technical solution, first it pays to establish a non-technical vision of what we want the future to look like after we’ve implemented this feature. The purpose here is to:

- Show the input that went into the technical design

- Confirm that you understand what the product team wants you to build, in language that you can both understand

- Provide a source of truth for what’s agreed between engineering and product as requirements are negotiated

- Provide something to refer back to as the technical design changes, in order to ensure that the technical design stays consistent with the use cases that we’re trying to support, and doesn’t lead to scope creep

This is the easiest step to skip, as there’s a good chance that you already have something like this from a product owner, product manager or business analyst, and you might not have the ability to negotiate what’s going to be built with them. Our team is fortunate enough to have a relationship between tech and product where what we want to achieve with a feature is negotiable, particularly when certain requirements have a low value that’s out of proportion with the amount of effort that’s going to be needed to implement them. This kind of insight often isn’t known until research and design are well underway, and hence when changes are agreed to the scope of what we’re going to build, they end up in this section.

This is where we start to get into the real meat of planning out a feature – figuring out the technical details of how we’re actually going to do the thing.

There are a few objectives here:

- Document what alternatives were considered and crucially, why we made the decisions that we did

- Create a single source of truth for what we’re going to build that can be referred to while we build it

- Figure out the best (and simplest) design that solves the problem, as defined in our “what we want to achieve” step

- Fact-check assumptions and known-unknowns

The end goal is to produce a write-up of what we want the end-state of the product to be with this feature built, and what needs to change in order to get there. The format of this changes depending on what is actually being produced, but usually I’ll document:

- An ERD for the new state of the data model

- An architecture diagram with any new components and how they fit in

- A written explanation of any changes made – e.g. if I’m adding a bit of functionality to an existing component, then the architecture diagram will be the same, but I still want to make sure that there’s a written explanation of what will change within it

- Where decisions have been made, an enumeration of the (reasonable) alternatives and a rationale for why a certain one was picked

Show your working

The objective of the design is to give anyone reading it an idea of not just what we want to do, but also why we’re doing it and what was factored into the design. The sad fact that needs to be kept in mind is that the design produced is probably at least a little bit wrong, and even in the unlikely event that it’s perfect now, it’ll likely become wrong in the future, because either external factors or our own understanding will change.

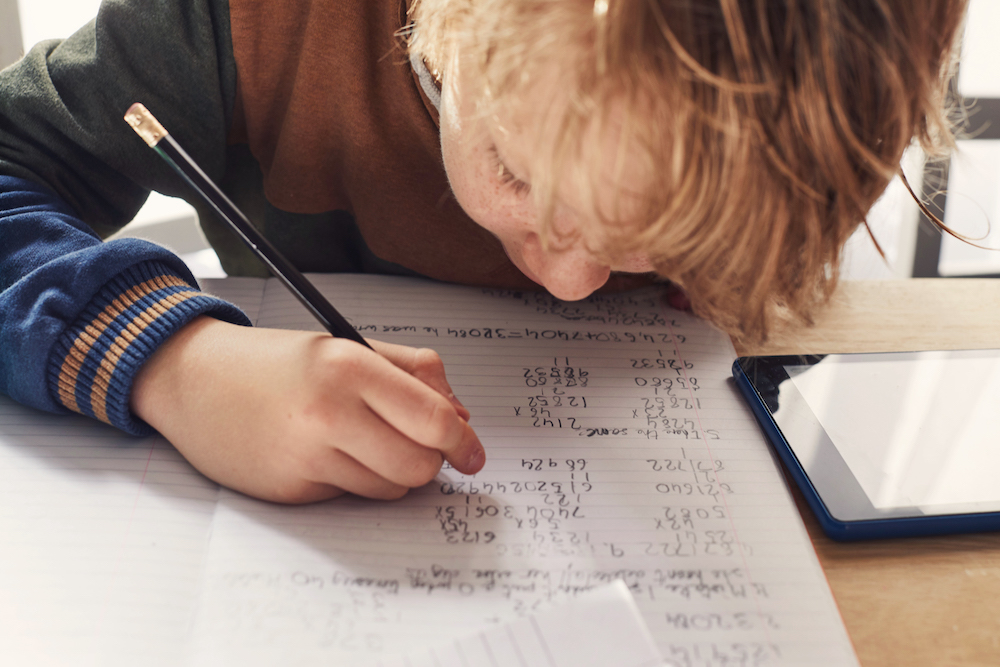

When you did maths in school, you probably got told to show your working so that if you got the last step wrong you could work your way back through the problem and see where you made a mistake – this is similar. Once we’ve found a problem with a design, documentation that simply describes an end-state without context or explanation of why decisions were made is useless – all we can do is throw it away and start again. Documentation that describes context, assumptions and why decisions were made can continue to be used to inform whatever the next iteration is. As always, the idea isn’t to write orders for the implementers to follow, it’s to give them all the information they need to adapt – the only difference between screwing around and science is writing it down!

Documentation that takes the reader on the journey of how decisions were made also invites them to check the reasoning behind them, and makes it much more likely that mistakes will be caught before implementation, either by you or whoever reads it. Writing out how you expect a feature to be implemented ends up working a bit like rubber-duck debugging at an architectural level – the process of making decisions and trying to justify them to a reader pushes you to think of ways to make things simpler and more resilient, and pushes you to negotiate with product around where scope can be altered in order to create a simpler and quicker-to-implement design.

A single source of truth

The other benefit of writing down the technical details of the implementation is to serve as a communication tool – it creates a single source of truth that any number of people can refer to in order to be on the same page about what’s going to happen. While face-to-face communication is better than writing, and working software is better than documentation, at this point we don’t have working software yet, and face-to-face communication becomes harder as teams grow and our ways of working become remote-first.

SiteMinder now has over 100 engineers collaborating from their homes and offices across the globe – my team is evenly split between engineers working from Australia and the Philippines, 3 hours of time difference and over 6000km apart. Just writing the thing down and letting everyone make comments or edit still works well regardless of team size, working location, schedules and timezones, and at the end you get a record to refer back to for free.

What keeps this from being wagile is that the documentation isn’t driving the people, the people are driving the documentation… the idea here isn’t that one person writes something down and everyone else gets in line with it, rather it’s that one (or more) people write up what they think should be done, and then that write-up becomes the medium of collaboration.

Assumptions, unknowns and plan continuation bias

The other benefit of taking the time to think something through and writing it down is that it flushes out the assumptions and known-unknowns hiding in the design and compels you to actually do the work to make sure that the world looks the same as you hope it does.

For instance, you might already know that in order to implement a feature you’ll need to call another system’s API to get data. As part of writing down the design, you might call it with Postman or check the Swagger file to make sure that it has everything that you need, at which point you realise that this particular endpoint is missing a few fields. This might mean that you need to go to a different system, or wait for that team to add the extra data. Or maybe this hiccup leads to a full redesign of the feature, or sends you back to the drawing board to consider whether it’s worth doing at all (good thing you defined why it was important above!).
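This kind of check can also be scripted once you have the other system’s Swagger/OpenAPI document. Here is a minimal sketch, assuming a hypothetical `Booking` schema and a hypothetical set of fields that the design depends on; none of these names come from a real API.

```python
# Hypothetical excerpt of another team's OpenAPI document, already parsed
# into a dict (in practice you might load it with json.load or a YAML parser).
spec = {
    "components": {
        "schemas": {
            "Booking": {
                "type": "object",
                "properties": {
                    "id": {"type": "string"},
                    "checkIn": {"type": "string", "format": "date"},
                    "checkOut": {"type": "string", "format": "date"},
                },
            }
        }
    }
}

# Fields that our (hypothetical) design assumes the endpoint returns.
REQUIRED_FIELDS = {"id", "checkIn", "checkOut", "currency", "totalAmount"}


def missing_fields(spec: dict, schema_name: str, required: set) -> set:
    """Return the required fields that the named schema does not declare."""
    schema = spec["components"]["schemas"][schema_name]
    declared = set(schema.get("properties", {}))
    return required - declared


if __name__ == "__main__":
    # Prints the assumptions the design makes that the API does not meet yet.
    print(sorted(missing_fields(spec, "Booking", REQUIRED_FIELDS)))
```

An empty result doesn’t prove the design is right, but a non-empty one is a cheap, early signal that an assumption needs revisiting.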

At this point, all of these options are actually pretty cheap because you’ve barely done any work yet. If you’d started implementing without trying to flesh out the design first, you might have started by setting up the handler for your API, then written code to retrieve some relevant data out of your database, then started on the client for the other team’s API and only then realised that there’s a problem. Now you’re stuck, because all the options above have become expensive. If you redesign your solution, you throw away everything you’ve done so far; if you wait for the other team to add extra fields, you’re blocked until they do. Either way, until this gets resolved you’re blocking the front-end that’s going to use your API: the front-end blocks the rollout of the feature, the rollout of the feature blocks the strategy of the organisation, and so on. When we write code, we want to avoid blocking wherever we can, and how we work has a lot of the same constraints.

Even worse than the blocking is that if you’ve already sunk loads of work into doing something a certain way, you’re really unlikely to tear that work up in order to do something different, even if that’s a much better way – this is called plan-continuation bias, and when it’s not causing shipwrecks, it’s causing nasty architecture. The less work you’ve sunk in at the point you realise you’ve made a mistake, the greater the chance you’ll fix it properly – and a few paragraphs and a diagram in a wiki article are pretty easy to let go of.

Spikes

Depending on the amount of research that has to go on, it might be worth distributing some of the load by getting other people to investigate certain questions or build prototypes. Usually we manage this by creating spike tasks that go into the normal sprint, well ahead of when we formally start work on a feature. This is important to do, because you don’t want to get into a habit of certain people only doing design and others only doing implementation – organising a team in this way leads the designers to get out of touch with the ground-truth of how implementation actually works, and removes the insight that the implementers could add to research and design.

Once you’ve got a decently fleshed-out idea of what it is you want to do, you’ve got to figure out how you’re going to do it. We have more traditional agile user stories, which are written by the product team, and then arrange more specific technical tasks around them, either as blockers or as subtasks. Usually I find it’s easiest to write out the tasks as groups of bullet points, then move on to fleshing them out once I’m happy with the breakdown and order. I feel that breaking down technical work into tasks is a really underappreciated and important art that has a lot in common with designing a distributed software system – you’re trying to increase concurrency, reduce contention, avoid blocking, keep your tasks decoupled and ensure compatibility between your components.

Concurrency

You want to organise the work so that the highest number of people possible can work at once. This is usually done by splitting up tasks such that they can be worked on independently, while avoiding making tasks so small that the overhead of getting things reviewed and tested actually makes things slower.
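One way to sanity-check a breakdown for concurrency is to group the tasks into “waves” of work that can happen at the same time. This is a rough sketch rather than part of our actual tooling: the task names are hypothetical, and it uses `graphlib.TopologicalSorter` from the Python standard library (3.9+).

```python
from graphlib import TopologicalSorter

# Hypothetical breakdown: each task maps to the set of tasks that block it.
blocked_by = {
    "db-table": set(),
    "api-stub": set(),
    "api-endpoint": {"db-table"},
    "fe-component": {"api-stub"},
    "e2e-test": {"api-endpoint", "fe-component"},
}


def waves(blocked_by: dict) -> list:
    """Group tasks into waves; every task within a wave can start together."""
    sorter = TopologicalSorter(blocked_by)
    sorter.prepare()  # raises graphlib.CycleError if tasks block each other
    result = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # tasks with no unfinished blockers
        result.append(ready)
        sorter.done(*ready)  # pretend the whole wave has been completed
    return result


if __name__ == "__main__":
    for number, wave in enumerate(waves(blocked_by), start=1):
        print(number, wave)
```

The widest wave hints at how many people can usefully work at once, while a long run of single-task waves suggests the breakdown is more serial than it needs to be.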

Contention

You want to avoid a situation where developers working on concurrent tasks end up stepping on each other’s toes or creating merge conflicts with each other. If possible, try to separate concurrent tasks so that the eventual pull requests for them won’t be touching the same files, or if they must, the changes to common files will be simple insertions that won’t cause merge strife.

This conflicts with concurrency a bit – in general I tend to favour high concurrency over low contention, but I mitigate it by calling out in the ticket where there are other tickets that might contend with this one, so that the developers know to talk to each other.

Keep in mind that even tasks that seem completely orthogonal can contend with each other if they end up touching the same common files – this happens particularly when two developers both decide to refactor a common file at the same time! This is hard to avoid when breaking down work, but try to keep it in mind when work is being scheduled.
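If you have a rough idea of which files each ticket will touch, spotting likely contention is a small set-intersection exercise. The sketch below is illustrative only: the ticket IDs and file paths are made up, and in practice the file lists would be educated guesses.

```python
from itertools import combinations

# Hypothetical tickets and the files each pull request is expected to touch.
expected_files = {
    "TASK-1": {"api/routes.py", "api/schema.py"},
    "TASK-2": {"web/Button.tsx"},
    "TASK-3": {"api/schema.py", "db/migrations/0007.sql"},
}


def contention(expected_files: dict) -> dict:
    """Return {(ticket_a, ticket_b): shared_files} for each overlapping pair."""
    overlaps = {}
    for a, b in combinations(sorted(expected_files), 2):
        shared = expected_files[a] & expected_files[b]
        if shared:
            overlaps[(a, b)] = shared
    return overlaps


if __name__ == "__main__":
    for (a, b), shared in contention(expected_files).items():
        print(f"{a} and {b} both touch: {sorted(shared)}")
```

Each pair that comes back is a candidate for a “talk to whoever has the other ticket” note.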

Blocking

Wherever possible you want to avoid one task blocking another. For instance, where there’s a separate frontend task that depends on a backend API being created, you might think about defining another ticket to make sure that API has a stub so that the FE can start before the BE is finished.
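As an illustration of the stub idea, here is a minimal sketch in which the consumer depends only on an agreed contract, so a canned implementation can stand in until the real one exists. The `PropertyApi` contract, the stub and the example values are all hypothetical.

```python
from typing import Protocol


class PropertyApi(Protocol):
    """The agreed contract; the stub and the eventual real client both fit it."""

    def get_property_name(self, property_id: str) -> str: ...


class StubPropertyApi:
    """Canned responses so front-end work can start before the back end exists."""

    def get_property_name(self, property_id: str) -> str:
        return "Example Hotel"  # hard-coded, hypothetical value


def property_heading(api: PropertyApi, property_id: str) -> str:
    """Consumer code written against the contract, not a concrete client."""
    return f"Property: {api.get_property_name(property_id)}"


if __name__ == "__main__":
    print(property_heading(StubPropertyApi(), "p-1"))
```

When the real client is ready, it replaces the stub without the consumer changing, provided the contract itself hasn’t moved.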

Decoupling

This overlaps with avoiding blocking and can be tricky, but in general you want to break down work in a way where if a task needs to be changed, it won’t ripple across all the other tasks in the feature.

This is best achieved by making tasks follow API boundaries. E.g. if you have a task to implement a front-end component, a task to implement a back-end-for-front-end (BFF) query for the FE, and a task to implement the API that the BFF calls, then a change in one task shouldn’t affect the others as long as none of the APIs change.

Conversely, if you have tasks that cut across multiple components, then a change to one task is likely to affect the scope of others… however, sometimes this is the best way to get something done.

Compatibility

You want to try to make sure that merging the PR for a task is never going to put the entire application in a non-releasable state. This means thinking about how features are going to be feature-flagged, how migrations are going to occur and so on when you break down work, and making that a part of the acceptance criteria. In particular, where one task changes an API and another task changes the consumer of that API to work with the new change, try to insist that the first change is backwards compatible.
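To make “backwards compatible” concrete, here is a small sketch of an additive API change behind a feature flag. The field names and the `split_guest_name` flag are hypothetical, and a real system would read the flag from its own feature-flag service rather than a plain dict.

```python
def booking_response(booking: dict, flags: dict) -> dict:
    """Serialise a booking for API consumers.

    The legacy `guestName` field is always returned, so existing consumers
    keep working. The new fields are purely additive and only appear when
    the (hypothetical) `split_guest_name` flag is switched on.
    """
    response = {
        "id": booking["id"],
        "guestName": f'{booking["first"]} {booking["last"]}',
    }
    if flags.get("split_guest_name", False):
        response["guestFirstName"] = booking["first"]
        response["guestLastName"] = booking["last"]
    return response


if __name__ == "__main__":
    booking = {"id": "b-1", "first": "Ada", "last": "Lovelace"}
    print(booking_response(booking, {"split_guest_name": False}))
    print(booking_response(booking, {"split_guest_name": True}))
```

With this shape, the task that changes the API can be merged and released on its own, and the task that updates the consumer can follow whenever it’s ready.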

When breaking down work it’s pretty usual to find places where the solution design itself could be:

- Simpler

- Better described

- More easily implemented (in particular, sometimes changing the design a bit can make breaking it into tasks much easier)

- Less wrong

Going back and changing the design as part of the breakdown process isn’t a failure, it’s part of the magic of doing this level of planning upfront. It’s also inevitable that requirements will change, or you’ll realise that you’ve missed something, and it’s important to embrace this as part of the process. In this case, by all means change the plan! The point at which you can’t change the plan is the point that you’ve entered wagile-land, and you need to rethink how you’re working.

There is nuance to how you manage that change though – if a feature is already being actively coded, you want to disrupt the team as little as possible. Where changes involve additions or extra requirements, try to favour creating new tasks or changing tasks that haven’t been through backlog grooming yet over changing tasks that have been groomed, especially ones that are already being worked on.

For instance, there have been times where I’ve started with a design that calls for building new functionality into an existing system, and only realised once we’ve begun that separating them out into a new system would make later deployments and maintenance much easier. In this situation, I reworked the plan so that the functionality would be initially built into the existing system and then moved out, so that the change didn’t immediately affect what was being worked on, but added a few extra chores towards the end of the epic.

There will of course be occasions where something being worked on is so completely wrong that it needs to be immediately changed or stopped – if this must be done, by all means do so. This is where breaking down tasks in a way that reduces blocking and coupling really helps – ideally if a change needs to be made, it should only affect one or two tasks rather than all of them.

Once we’ve figured out the structure of the tasks we want to do, we can flesh them out. We tend to put effort into quite detailed tasks that are intended to give a developer all the information they need to implement a small chunk of a feature.

This process runs counter to conventional agile wisdom, which tends to hold that detailed tasks are a sign that the team is slipping into working according to rigid plans. I think we need a bit more wiggle-room on this – overthrowing waterfall is all well and good, but for a revolution to give way to stability, compromises need to be made. If you’re in the same room as a handful of colleagues, one-sentence story tasks work great because you can simply call out and ask if someone’s already refactoring this method, or whether you should generate a client for the Galactus API as part of this story, or where the scope of this should end.

Once you grow beyond a number of developers that can be easily counted on your fingers and everyone’s working remotely from multiple timezones, the previously taken-for-granted communication that enables all of this comes under a lot more pressure, and the result is blockers, rework, frustration and low velocity.

The key here is that our tasks aren’t intended as a command-and-control mechanism. Rather, they’re intended to:

- Asynchronously communicate the context of the task and transfer all relevant knowledge to the person who’ll perform the task, without them having to re-do any research

- Clearly spell out what needs to be done

- Provide clear boundaries for what is in scope and out of scope, in order to inform:

– The developer who’s got to do the task

– The tester(s) who have to test it

– Other developers who might be trying to figure out where a certain thing will be done

- Be negotiable!

Usually the tech-lead of the team will write out the initial task, and it’ll then be reviewed by a developer and go through a round of backlog-grooming by the team before being added to a sprint. The team has the freedom to push back or ask for clarification at any time – if this means changing the plan, so be it – that’s what it’s for. For the most part I find that our developers are pretty happy with this system: it’s more fun to write code with all the information in front of you than be chasing people for clarifications, and there’s plenty of opportunities to get involved with clarification-chasing as part of spikes earlier on.

When writing tickets, I try to link back to the design document wherever I can as this is much easier to keep up to date than many tasks or story cards, but inevitably there will be some duplication of information between that and the tasks. More important than avoiding duplication is to make absolutely sure that the developer completing the task has all the information they need, and that they have it at their fingertips. Personally when I write tasks, I try to imagine my audience as someone who’s only skimmed the design document and zoned out during backlog grooming – if you’re sick of repeating yourself, then they’re only beginning to understand the message.

The rough template I use goes like this. You don’t have to follow it exactly, or at all, but this might help you think about what you should include. The only really important part is that there should always be acceptance criteria or something like it, because that’s how the developer, code reviewer, tester etc. all align on what the definition of done for the ticket is. No acceptance criteria = arguments, stress and wasted time.

Intro / Context

I start writing up a task by introducing it and establishing some context. Above, I put a bunch of emphasis on documenting the overall context for the feature, and now we’ve got to establish how this task fits into the overall plan. The goal here is similar to when we write down the feature-level context: we want to give individuals enough background information that they can adapt to changing circumstances where they need to, and spot gaps or mistakes in the overall task breakdown as early as possible. This is important, because when breaking down tasks you’re viewing the entire feature from 10,000 feet, and it’s easy to make mistakes that are much more obvious to the person who actually has to perform the work.

I recommend including:

- Where we should be up to when we start the task – e.g. “in TASK-421 we added this database table, so now we can add the API endpoint to suit it”

- How this fits into the overall plan – e.g. “creating this mock api will unblock all of the FE tasks”

- If relevant, what parts of the overall context relate to this task – e.g. if the task to implement OAuth will enable users from a new client to log in, make sure to mention it

Description (usually doesn’t have a heading)

This is a brief, free-text explanation of what this task does without technical detail, such that it can be understood by anybody regardless of their technical background. E.g. if you’re connecting to a new API, you’d say “In this task we’ll establish a connection to the Galactus service so that we can retrieve users’ birthdays”, rather than “In this task we’ll make a GET call to the Galactus/birthday endpoint in order to retrieve ISO Timestamps”. This is here mainly so that someone can establish at a glance what this task is doing, without having to get into the weeds of how it’s doing it.

Technical Notes

Anything that you’ve learned that’s relevant. In general, if you can, you want to link to information rather than copy-paste it, because if you copy something and it changes, you need to copy-paste it again, and if you forget, the ticket ends up getting done wrong. Examples of information to link to include:

- Swagger files of an API to consume

- Relevant code files in our repos

- Relevant code files in other repos

- Parts of the design (particularly proposed SDLs, ERDs and swagger files)

- Relevant Slack conversations

- Etc.

If you can link out to these resources, then do so, even if they’re easy to find… the less friction there is in finding these resources, the more likely that they’ll actually be used. If you’ve got an idea of how this should be implemented at a low-level, feel free to put it in but try to write it as a suggestion rather than an instruction. Keep in mind that:

- Writing code to someone’s exact specifications is usually not very fun for the developer doing it

- Often you miss a minor detail that makes your idea of how to implement something impossible, and it puts the developer in an awkward position if they’ve got a ticket to implement something in a way that can’t possibly work.

- You might have a more senior title than the person who ends up picking up the ticket, but chances are that they do more coding than you do, and might have better ideas!

Testing Notes

Anything you know about how to test this that’s relevant. This usually comes up when integrating with external systems – if you know that the external system has to be in a certain state to do a certain thing then make sure you document both what that state is, and how to manipulate the external system into it! Don’t assume that this is obvious – what’s obvious to you after days of doing research might be a complete mystery to someone who’s coming to it for the first time.

Out of Scope

Even if something being out of scope is implied by its absence from the acceptance criteria, it’s usually better to be as explicit as possible about what’s out of scope, even to the point of being slightly condescending, because misunderstandings here can get very expensive. Particularly watch out for:

- Functionality that is or was specifically covered in another task

- Things that are normally done that don’t apply to this ticket (e.g. for a ticket to temporarily mock an API, call out that integration testing the mock isn’t in scope)

- Where you’re specifically calling for something to be done hackily (e.g. you need a quick fix for some poor-quality code ahead of a refactor, write down that you’re not expecting a refactor)

Acceptance Criteria

The most important part. This should be a list of as-objective-as-possible criteria that, if they’re all true, mean that this task is complete.

If possible I try to write these from the perspective of someone trying to test, not someone trying to implement it – e.g.:

- For a ticket that hits the UI, try to write ACs along the lines of “The button turns red after it’s pressed once” rather than “There’s a <button> element with an onClick handler that sets the colour state to #FF0000” (there’s no need for the tester to get their colour dropper out)

- For an API ticket, try to write along the lines of “when querying against the Property, it’s possible to retrieve the property name” rather than “a property-name resolver has been created”
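An AC written from the tester’s perspective also tends to translate directly into an automated check. Here is a minimal sketch for the API example: `resolve_property` is a hypothetical stand-in for the real query, and the test only asserts on what comes back, not on how it’s produced.

```python
def resolve_property(property_id: str) -> dict:
    """Hypothetical stand-in for the API under test."""
    properties = {"p-1": {"id": "p-1", "name": "Example Hotel"}}
    return properties[property_id]


def test_property_name_can_be_retrieved() -> None:
    # AC: "when querying against the Property, it's possible to retrieve
    # the property name". Nothing here mentions resolvers or internals.
    result = resolve_property("p-1")
    assert result["name"] == "Example Hotel"


if __name__ == "__main__":
    test_property_name_can_be_retrieved()
    print("AC satisfied")
```

Because the check describes behaviour only, it keeps passing if the implementation behind it is later reworked.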

Unfortunately, it’s not always possible to keep technical details out… e.g. occasionally you need to break the rule about specifying implementation details and write down exactly what’s going to change in the code. In that case try to write some ACs for the code reviewer (e.g. “the doThisAndDoThat function has been split into doThis and doThat”) and write regression ACs for the tester (e.g. “the doThis screen still works as it did before”).

In general I try to keep implementation details out of ACs, but it sometimes makes sense to put them in when you know that certain parts of the implementation are going to be annoying to do and are likely to be skipped – e.g. I often put in an AC around writing an integration test against the API, because I find that without it the test will often get overlooked.

I’ve been reading Will Larson’s Staff Engineer, and in the interviews the necessity and power of writing about work yet to be done comes up a lot. I assume that the organisations (mostly big tech orgs) that the interviewees come from have their own processes that the writing fits into, but I couldn’t find a lot of information about how these work, so I’ve tried to fill that gap by writing this down in detail, in particular explaining why this works and how it fits into conventional agile thinking. If you take away all that though, it really boils down to a couple of simple principles that most engineers would recognise:

- If you look ahead into the future, you’ll be able to do the work in the most efficient and predictable way (you can do branch prediction on your work)

- Do as much work that will affect or block other work (usually research and design) ahead of time, so that it’s not on the critical path when it gets done (essentially, prefetch these tasks)

- Don’t depend on synchronous communication to transfer context unless you’re a very small, co-located team (as scale and complexity increase, asynchronous messaging becomes more resilient)

Hopefully this gave you some food for thought – either ideas that you can adopt, an understanding of why this works for me but won’t work for you, or a really clear understanding about how I’m wrong!